Have you ever wondered how computers communicate and process information? Behind the sleek screens and sophisticated software lies a simple yet powerful foundation: the binary system of 0s and 1s. In this article, we will delve into the world of 0s and 1s in computing, exploring their significance, applications, and future prospects.

Introduction

At the core of digital systems, the binary code plays a pivotal role in representing and manipulating data. The binary code, composed of only two digits—0 and 1—forms the basis of all digital communication and computation. Understanding the fundamental concepts of 0s and 1s is crucial for comprehending the inner workings of modern technology.

Binary Code

Binary code is a way of representing data using only two symbols, 0 and 1. The decimal system used in everyday life employs ten digits (0 to 9); binary needs only two. Because a two-state signal is easy for electronic circuits to produce and to tell apart, even in the presence of noise, this representation lets computers store and process information reliably.

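In digital circuits, a 1 typically corresponds to a high voltage level and a 0 to a low one, which is often simplified as the presence or absence of an electrical signal. Each additional bit doubles the number of possible patterns, so n bits can encode 2^n distinct values. By combining 0s and 1s in this way, computers can represent complex data, execute calculations, and perform the tasks that drive the modern world.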

Binary Number System

The binary number system is a positional numeral system that uses only two digits, 0 and 1, to represent numbers. In the decimal system, each digit’s position represents a power of ten; in the binary system, each position represents a power of two. For example, the binary number 1011 represents the decimal number 11, because 1×8 + 0×4 + 1×2 + 1×1 = 11.
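The positional rule can be checked with a few lines of Python. This is a minimal sketch rather than a definitive implementation; the function name `binary_to_decimal` is made up for illustration, while `int(..., 2)` and `bin()` are Python built-ins.

```python
def binary_to_decimal(bits: str) -> int:
    """Sum each digit multiplied by the power of two for its position."""
    total = 0
    # Reverse the string so that index 0 is the rightmost (least significant) digit.
    for position, digit in enumerate(reversed(bits)):
        if digit not in ("0", "1"):
            raise ValueError(f"not a binary digit: {digit!r}")
        total += int(digit) * (2 ** position)
    return total


print(binary_to_decimal("1011"))  # 11
print(int("1011", 2))             # 11, using the built-in conversion
print(bin(11))                    # 0b1011, converting back to binary
```

The hand-written loop mirrors the arithmetic in the example; in practice the built-in `int(text, 2)` does the same job.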

While the binary number system may seem unfamiliar at first, it forms the backbone of digital systems, allowing computers to store, process, and transmit numerical data with ease. The binary system’s simplicity and compatibility with electronic circuits make it an ideal choice for digital communication and computation.

Binary Operations

In computer programming, operations on individual bits play a crucial role in manipulating data and expressing logic. Three of the most common are AND, OR, and XOR (exclusive OR), each of which combines two input bits into one output bit.

The logical AND operation returns 1 if both input bits are 1; otherwise, it returns 0. The logical OR operation, on the other hand, returns 1 if at least one input bit is 1. Lastly, the XOR operation returns 1 if the input bits are different and 0 if they are the same.
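The rules for AND, OR, and XOR can be tabulated with Python's bitwise operators `&`, `|`, and `^`. This is an illustrative sketch; the helper name `truth_table` is an assumption made for this example.

```python
def truth_table():
    """Return rows of (a, b, a AND b, a OR b, a XOR b) for every pair of input bits."""
    rows = []
    for a in (0, 1):
        for b in (0, 1):
            rows.append((a, b, a & b, a | b, a ^ b))
    return rows


print("a b AND OR XOR")
for a, b, and_result, or_result, xor_result in truth_table():
    print(a, b, and_result, "  ", or_result, " ", xor_result)

# The same operators work on whole numbers, one bit position at a time.
print(format(0b1100 & 0b1010, "04b"))  # 1000
print(format(0b1100 | 0b1010, "04b"))  # 1110
print(format(0b1100 ^ 0b1010, "04b"))  # 0110
```

Applied to multi-bit values, each operator simply processes every bit position independently, which is why these three rules scale up to arbitrary data.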

These binary operations serve as the building blocks for complex algorithms and decision-making processes in programming languages. Understanding how 0s and 1s interact through these logical operations is essential for efficient programming and problem-solving.

ASCII Code

The American Standard Code for Information Interchange (ASCII) is a widely used character encoding standard that assigns numeric values to characters. By utilizing a 7-bit binary code, ASCII represents a total of 128 characters, including uppercase and lowercase letters, digits, punctuation marks, and control characters.

In the ASCII code, each character is assigned a unique sequence of 0s and 1s, allowing computers to represent and process textual information. For example, the capital letter ‘A’ has the code 65, which is 1000001 in 7-bit binary and is usually stored in an 8-bit byte as 01000001. This binary representation forms the basis for character encoding in various programming languages and communication protocols.
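The mapping between characters and bit patterns can be inspected directly in Python. The sketch below uses the built-ins `ord`, `chr`, and `format`; the helper names `char_to_bits` and `bits_to_char` are hypothetical, and the 8-bit default width is an assumption reflecting how ASCII is commonly stored in bytes.

```python
def char_to_bits(ch: str, width: int = 8) -> str:
    """Return the character's code as a zero-padded binary string."""
    return format(ord(ch), f"0{width}b")


def bits_to_char(bits: str) -> str:
    """Return the character whose code is the given binary string."""
    return chr(int(bits, 2))


print(ord("A"))                  # 65
print(char_to_bits("A"))         # 01000001
print(char_to_bits("A", 7))      # 1000001
print(bits_to_char("01000001"))  # A
print(" ".join(char_to_bits(c) for c in "Hi"))  # 01001000 01101001
```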

Binary Storage

As computers handle vast amounts of data, efficient storage mechanisms are crucial. Binary storage, utilizing 0s and 1s, provides a compact and reliable solution for storing and retrieving information.

At the lowest level, computers store data as individual bits, each either 0 or 1. Bits are grouped into bytes of eight bits each, and bytes are in turn counted in larger units such as kilobytes, megabytes, and beyond. By efficiently organizing and addressing these binary storage units, computers can store and retrieve vast amounts of data in a structured manner.
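As a rough illustration of how bits group into bytes, the Python sketch below stores a number in two bytes and prints the underlying bits. The helper names `distinct_values` and `to_bit_string` are made up for this example, and the choice of two bytes in big-endian order is an assumption, not something the article specifies.

```python
def distinct_values(num_bits: int) -> int:
    """Number of different patterns that num_bits bits can hold."""
    return 2 ** num_bits


def to_bit_string(data: bytes) -> str:
    """Show each byte as eight 0s and 1s, separated by spaces."""
    return " ".join(format(byte, "08b") for byte in data)


number = 1000
stored = number.to_bytes(2, byteorder="big")  # two bytes, most significant first

print(distinct_values(8))                       # 256
print(to_bit_string(stored))                    # 00000011 11101000
print(int.from_bytes(stored, byteorder="big"))  # 1000
```

One byte covers 256 values, so 1000 needs a second byte; reading the same bytes back in the same order recovers the original number.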

Digital Images and Videos

Digital images and videos rely on binary representation to capture and display visual information. Images are composed of pixels, where each pixel represents a tiny dot of color. Each pixel’s color is defined using a combination of 0s and 1s, representing the intensity of red, green, and blue (RGB) channels.
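To make the pixel description concrete, here is a small Python sketch that packs one color into bits. It assumes 8 bits per channel (24 bits per pixel), which is a common layout but only one of several in use; the function names `pack_rgb` and `unpack_rgb` are hypothetical.

```python
def pack_rgb(red: int, green: int, blue: int) -> int:
    """Combine three 8-bit channel intensities into one 24-bit pixel value."""
    for channel in (red, green, blue):
        if not 0 <= channel <= 255:
            raise ValueError("each channel must be in the range 0..255")
    return (red << 16) | (green << 8) | blue


def unpack_rgb(pixel: int) -> tuple:
    """Split a 24-bit pixel value back into (red, green, blue)."""
    return (pixel >> 16) & 0xFF, (pixel >> 8) & 0xFF, pixel & 0xFF


orange = pack_rgb(255, 165, 0)
print(format(orange, "024b"))  # 111111111010010100000000
print(format(orange, "06X"))   # FFA500
print(unpack_rgb(orange))      # (255, 165, 0)
```

The first eight bits hold the red intensity, the next eight green, and the last eight blue, so an image is ultimately one long sequence of such groups.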

Videos, on the other hand, consist of a sequence of images displayed rapidly. Each frame of a video is encoded using binary representation, capturing motion and visual information over time. By relying on the binary representation of pixels, computers can process, transmit, and display images and videos with remarkable precision.

Error Detection and Correction

In the realm of data communication and storage, error detection and correction are crucial for maintaining data integrity. By utilizing binary representation, computers employ error detection techniques such as parity checks and checksums.

These techniques add redundant bits that are computed from the data itself. A parity bit or checksum lets the receiver detect that an error occurred during transmission or storage, typically prompting a retransmission. Correcting an error in place requires more redundancy, as provided by error-correcting codes such as Hamming codes.
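A parity check is simple enough to sketch in a few lines of Python. The example below uses even parity, where the extra bit makes the total number of 1s even; the function names are made up for illustration, and real protocols differ in where the parity bit is placed.

```python
def add_even_parity(bits: str) -> str:
    """Append one bit so that the total count of 1s is even."""
    parity = bits.count("1") % 2
    return bits + str(parity)


def check_even_parity(codeword: str) -> bool:
    """Return True if the codeword still has an even number of 1s."""
    return codeword.count("1") % 2 == 0


sent = add_even_parity("1000001")     # the 7-bit pattern with a parity bit added
print(sent)                           # 10000010
print(check_even_parity(sent))        # True: arrived intact
print(check_even_parity("00000010"))  # False: one flipped bit is detected
print(check_even_parity("01000010"))  # True: two flipped bits go unnoticed
```

The last line shows the limit of a single parity bit: it catches any odd number of flipped bits but cannot locate the error or notice an even number of flips, which is why stronger codes exist.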

The Future of 0s and 1s

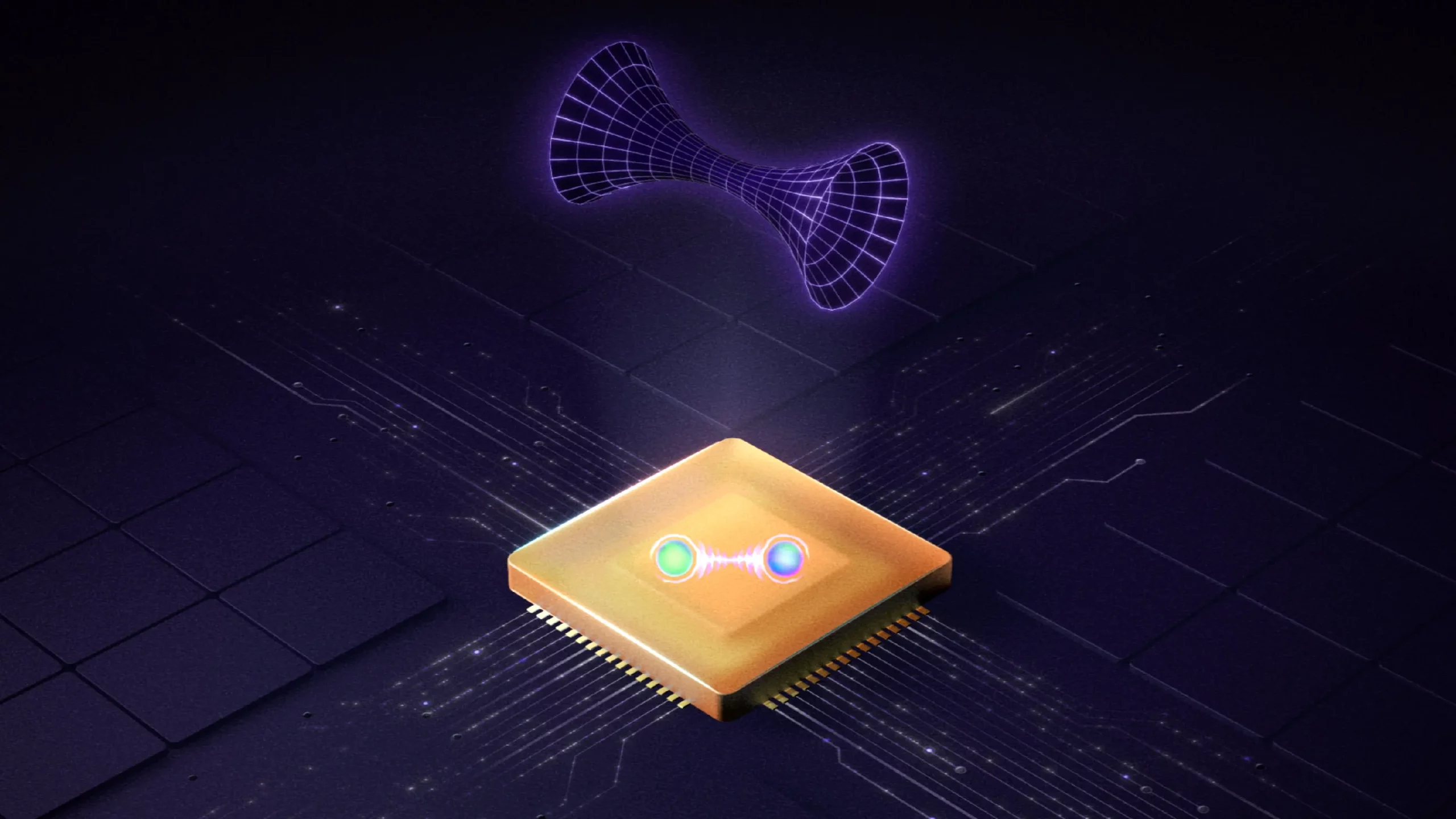

As technology continues to advance, the importance of 0s and 1s in computing shows no signs of diminishing. Artificial intelligence runs on conventional binary hardware, and even quantum computing, which goes beyond two fixed states, is still described in terms of 0 and 1.

Quantum computers, leveraging the principles of quantum mechanics, use qubits as their units of quantum information. Like a classical bit, a qubit yields either 0 or 1 when it is measured. Before measurement, however, a qubit can exist in a superposition of both states, opening up new possibilities for computation and data processing.

Artificial intelligence, with its vast datasets and complex algorithms, relies on binary representation for processing and storing information. Neural networks, which underlie much of modern AI, perform their calculations and pattern recognition on matrices of numbers, typically floating-point values, and each of those numbers is itself stored as a pattern of 0s and 1s. As AI continues to evolve, so too will the significance of 0s and 1s in this field.

Conclusion

In the digital age, the humble 0s and 1s form the bedrock of computing. From binary code and the binary number system to binary operations and storage mechanisms, 0s and 1s enable computers to communicate, process data, and execute complex tasks. Their importance in representing characters, images, and videos, as well as detecting and correcting errors, cannot be overstated. As technology advances, we can expect 0s and 1s to continue shaping the future of computing and driving innovation.